scaleph

The Scaleph project features data integration, data development, job scheduling and orchestration, and tries to provide a one-stop data platform for developers and enterprises. Scaleph hopes to help people aggregate data, analyze data, unlock the intrinsic value of their data, and profit from it.

Scaleph is driven by personal interest and evolves actively through dedicated developers. flowerfine is open and appreciates any help.

Features

- Click-and-drag data integration in the web UI, backed by out-of-the-box connectors.

- Flink job execution across multiple Flink versions, deployment modes and resource providers. We develop flinkful to solve the problems this variety brings.

- Job version management.

- Project configuration, dependencies and resources.

Quick Start

To explore Scaleph, you need a running Scaleph application, which you can then interact with through Scaleph Admin.

Luckily, deploying Scaleph locally takes just three steps.

- Make sure Docker is installed on your machine.

- Clone the repository.

- Use Docker Compose and the Scaleph Docker image to quickly install and run Scaleph.

git clone https://github.com/flowerfine/scaleph.git

cd scaleph/tools/docker/deploy

docker-compose up

Once all containers have started, the UI is ready to go at http://localhost!
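
If you would rather script the wait than watch the container logs, a small retry helper can poll the UI until it responds. The sketch below is not part of Scaleph: the `wait_for` function name, the attempt count and the delay are illustrative assumptions, and the example call assumes `curl` is installed and that the UI is served at http://localhost as stated above.

```shell
#!/bin/sh
# Hypothetical helper, not shipped with Scaleph.
# Retry a probe command until it succeeds or the attempts run out.
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        # Succeed as soon as the probe command exits with status 0.
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        # Pause between attempts, but not after the last one.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}
```

For example, `wait_for 30 2 curl -fsS http://localhost && echo "Scaleph UI is up"` would probe the UI up to 30 times, two seconds apart, and print the message once a request succeeds.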

Documentation

Coming soon. In the meantime, please refer to the wiki.

Build and Deployment

- develop. This doc describes how to set up a local development environment for the Scaleph project.

- checkstyle. The Scaleph project requires clean and robust code, which helps Scaleph go further and develop better.

- build. This doc describes how to build the Scaleph project from source. Scaleph adopts maven as its build system; for more information about building from source and deployment, please refer to build.

- docker. As more applications run in containers on the cloud than on bare-metal machines, Scaleph provides its own image.

- deployment. For different deployment purposes such as development, testing or production, Scaleph makes a best effort to let people deploy the project locally, on Docker and on Kubernetes.

RoadMap

features

- data ingress and egress.

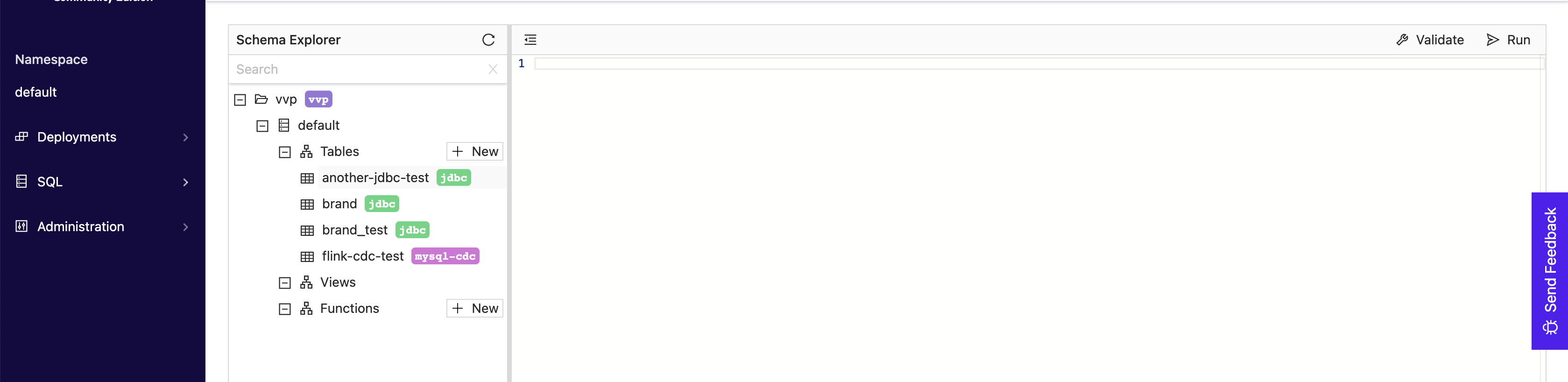

- data integration in the flink way. Scaleph features seatunnel, flink-cdc-connectors and other flink connectors.

- a newbie-friendly web UI.

- data development

- udf + sql.

- support multi-layer data warehouse development.

- job scheduling and orchestration

architectures

- cloud native

- container and kubernetes development and runtime environment.

- flink operator

- seatunnel operator

- scaleph operator

- java 17, quarkus.

- plugins. https://dubbo.apache.org/zh/docsv2.7/dev/principals/

Contributing

For contributions, please refer to CONTRIBUTING.

Contact

- Bugs and Features: Issues

License

Scaleph is licensed under the Apache License, Version 2.0.