LMDB for Java

LMDB offers:

- Transactions (full ACID semantics)

- Ordered keys (enabling very fast cursor-based iteration)

- Memory-mapped files (enabling optimal OS-level memory management)

- Zero copy design (no serialization or memory copy overhead)

- No blocking between readers and writers

- Configuration-free (no need to "tune" it to your storage)

- Instant crash recovery (no logs, journals or other complexity)

- Minimal file handle consumption (just one data file; not 100,000's like some stores)

- Same-thread operation (LMDB is invoked within your application thread; no compactor thread is needed)

- Freedom from application-side data caching (memory-mapped files are more efficient)

- Multi-threading support (each thread can have its own MVCC-isolated transaction)

- Multi-process support (on the same host with a local file system)

- Atomic hot backups

LmdbJava adds Java-specific features to LMDB:

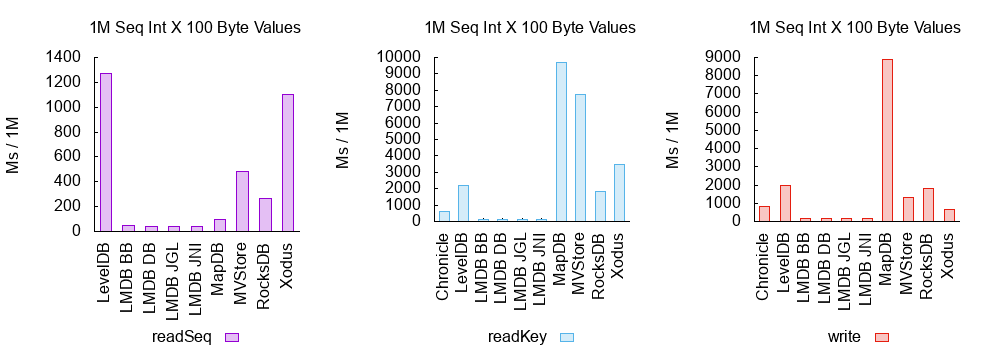

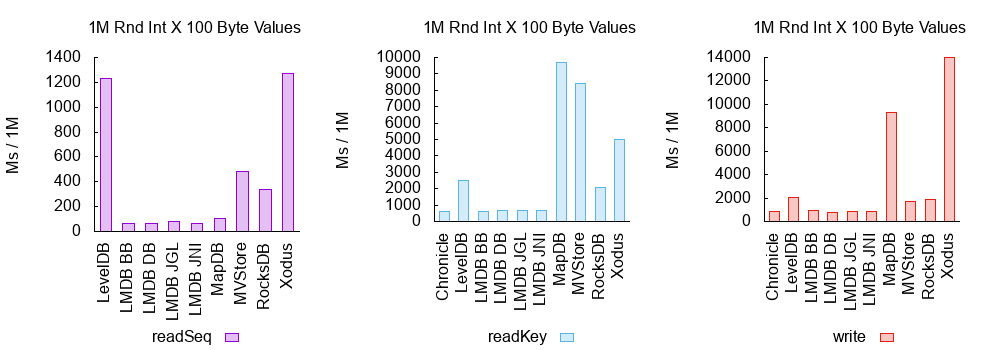

- Extremely fast across a broad range of benchmarks, data sizes and access patterns

- Modern, idiomatic Java API (including iterators, key ranges, enums, exceptions etc)

- Nothing to install (the JAR embeds the latest LMDB libraries for Linux, OS X and Windows)

- Buffer agnostic (Java

ByteBuffer, AgronaDirectBuffer, NettyByteBuf, your own buffer) - 100% stock-standard, officially-released, widely-tested LMDB C code (no extra C/JNI code)

- Low latency design (allocation-free; buffer pools; optional checks can be easily disabled in production etc)

- Mature code (commenced in 2016) and used for heavy production workloads (eg > 500 TB of HFT data)

- Actively maintained and with a "Zero Bug Policy" before every release (see issues)

- Available from Maven Central and OSS Sonatype Snapshots

- Continuous integration testing on Linux, Windows and macOS with Java 8, 11 and 14

Performance

Full details are in the latest benchmark report.

Documentation

Support

We're happy to help you use LmdbJava. Simply open a GitHub issue if you have any questions.

Contributing

Contributions are welcome! Please see the Contributing Guidelines.

License

This project is licensed under the Apache License, Version 2.0.

This project distribution JAR includes LMDB, which is licensed under The OpenLDAP Public License.