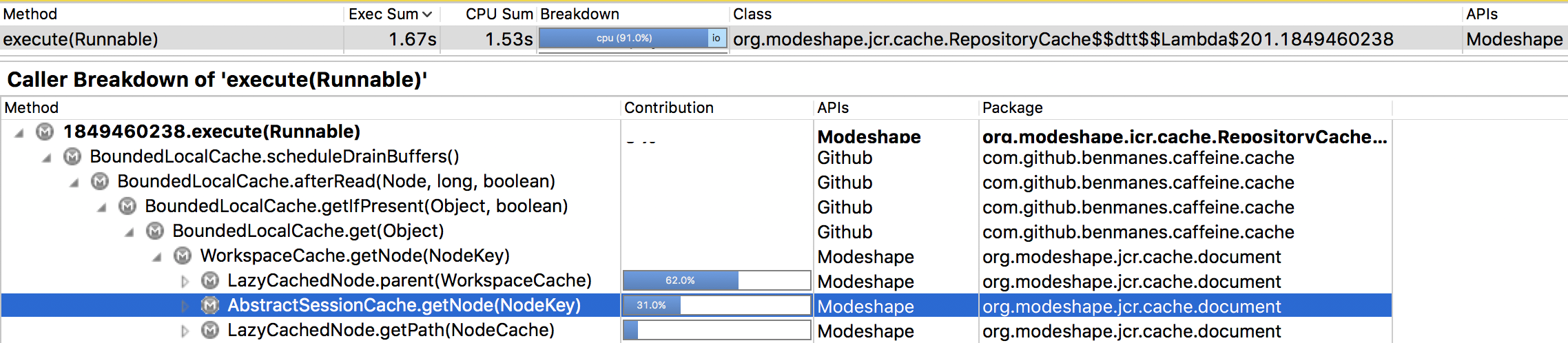

Caffeine is a high-performance, near-optimal caching library. For more details, see our user's guide and browse the API docs for the latest release.

Cache

Caffeine provides an in-memory cache using a Google Guava-inspired API. The improvements draw on our experience designing Guava's cache and ConcurrentLinkedHashMap.

    LoadingCache<Key, Graph> graphs = Caffeine.newBuilder()
        .maximumSize(10_000)
        .expireAfterWrite(5, TimeUnit.MINUTES)
        .refreshAfterWrite(1, TimeUnit.MINUTES)
        .build(key -> createExpensiveGraph(key));
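
As a rough end-to-end sketch of the snippet above, the class below swaps `Key` and `Graph` for plain strings and uses a hypothetical `createExpensiveGraph` stand-in that counts its invocations. The class name, the counter, and the string types are assumptions made for illustration; only the builder calls come from the example above.

```java
import com.github.benmanes.caffeine.cache.Caffeine;
import com.github.benmanes.caffeine.cache.LoadingCache;

import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class LoadingCacheExample {
  // Counts loader invocations so the effect of caching is observable.
  static final AtomicInteger LOADS = new AtomicInteger();

  // Hypothetical stand-in for createExpensiveGraph(key).
  static String createExpensiveGraph(String key) {
    LOADS.incrementAndGet();
    return "graph-for-" + key;
  }

  // Same builder configuration as the example above, with String keys and values.
  static LoadingCache<String, String> newGraphCache() {
    return Caffeine.newBuilder()
        .maximumSize(10_000)
        .expireAfterWrite(5, TimeUnit.MINUTES)
        .refreshAfterWrite(1, TimeUnit.MINUTES)
        .build(LoadingCacheExample::createExpensiveGraph);
  }

  public static void main(String[] args) {
    LoadingCache<String, String> graphs = newGraphCache();
    String first = graphs.get("a");   // miss: the loader runs
    String second = graphs.get("a");  // hit: served from the cache
    System.out.println(first.equals(second) + ", loads=" + LOADS.get());
  }
}
```

Repeated `get` calls for the same key within the refresh interval should reuse the cached value, so the loader count stays at one.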

Features at a Glance

Caffeine provides flexible construction to create a cache with a combination of the following features:

- automatic loading of entries into the cache, optionally asynchronously

- size-based eviction when a maximum is exceeded based on frequency and recency

- time-based expiration of entries, measured since last access or last write

- asynchronous refresh when the first stale request for an entry occurs

- keys automatically wrapped in weak references

- values automatically wrapped in weak or soft references

- notification of evicted (or otherwise removed) entries

- writes propagated to an external resource

- accumulation of cache access statistics
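
A few of the features listed above can be combined on one builder. The following is a minimal sketch, assuming Caffeine 3.x is on the classpath; `FeatureSketch`, `REMOVALS`, and the key and value types are hypothetical names chosen for illustration. A same-thread executor is configured so that removal notifications run on the calling thread, which keeps the demonstration deterministic; with the default executor they would be delivered asynchronously.

```java
import com.github.benmanes.caffeine.cache.Cache;
import com.github.benmanes.caffeine.cache.Caffeine;
import com.github.benmanes.caffeine.cache.RemovalCause;

import java.time.Duration;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class FeatureSketch {
  // Records the cause of every removal reported to the listener.
  static final List<RemovalCause> REMOVALS = new CopyOnWriteArrayList<>();

  static Cache<String, Integer> newCache() {
    return Caffeine.newBuilder()
        .maximumSize(100)                           // size-based eviction
        .expireAfterAccess(Duration.ofMinutes(10))  // time-based expiration since last access
        .recordStats()                              // accumulate access statistics
        .executor(Runnable::run)                    // assumption: same-thread executor for the demo
        .removalListener((String key, Integer value, RemovalCause cause) -> REMOVALS.add(cause))
        .build();
  }

  public static void main(String[] args) {
    Cache<String, Integer> cache = newCache();
    cache.put("a", 1);
    cache.getIfPresent("a");        // recorded as a hit
    cache.getIfPresent("missing");  // recorded as a miss
    cache.invalidate("a");          // listener is notified with cause EXPLICIT
    System.out.println(cache.stats() + " removals=" + REMOVALS);
  }
}
```

Weak or soft references and automatic loading are omitted here to keep the sketch short; they are enabled through further builder options in the same style.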

In addition, Caffeine offers the following extensions:

- JSR-107 JCache

- Guava adapter

Use Caffeine in a community provided integration:

- Play Framework: High velocity web framework

- Micronaut: A modern, full-stack framework

- Spring Cache: As of Spring 4.3 & Boot 1.4

- Akka: Build reactive applications easily

- Quarkus: Supersonic Subatomic Java

- Scaffeine: Scala wrapper for Caffeine

- ScalaCache: Simple caching in Scala

- Camel: Routing and mediation engine

- JHipster: Generate, develop, deploy

Powering infrastructure near you:

- Dropwizard: Ops-friendly, high-performance, RESTful APIs

- Cassandra: Manage massive amounts of data, fast

- Accumulo: A sorted, distributed key/value store

- HBase: A distributed, scalable, big data store

- Apache Solr: Blazingly fast enterprise search

- Infinispan: Distributed in-memory data grid

- Redisson: Ultra-fast in-memory data grid

- OpenWhisk: Serverless cloud platform

- Corfu: A cluster consistency platform

- Grails: Groovy-based web framework

- Finagle: Extensible RPC system

- Neo4j: Graphs for Everyone

- Druid: Real-time analytics

In the News

- An in-depth description of Caffeine's architecture.

- Caffeine is presented as part of research papers evaluating its novel eviction policy:

  - TinyLFU: A Highly Efficient Cache Admission Policy by Gil Einziger, Roy Friedman, Ben Manes

  - Adaptive Software Cache Management by Gil Einziger, Ohad Eytan, Roy Friedman, Ben Manes
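
For readers unfamiliar with frequency-based admission, the idea named in the first paper's title can be illustrated with a toy example: keep approximate access counts in a compact sketch, and admit a new entry only if it is estimated to be used more often than the entry it would displace. The class below is a simplified illustration using only the JDK, not Caffeine's implementation; `TinyLfuSketch`, its hash mixing, and the table sizes are assumptions, and refinements such as periodic aging of the counters are left out.

```java
/** Toy count-min sketch with a frequency-based admission check (illustrative only). */
class TinyLfuSketch {
  // Arbitrary odd constants, one per row, used to derive independent-looking indexes.
  private static final int[] SEEDS = {0x9E3779B9, 0x85EBCA6B, 0xC2B2AE35, 0x27D4EB2F};

  private final int[][] counters;
  private final int width;

  public TinyLfuSketch(int width) {
    this.width = width;
    this.counters = new int[SEEDS.length][width];
  }

  // Maps a key to a counter slot in the given row.
  private int index(Object key, int row) {
    int h = key.hashCode() * SEEDS[row];
    h ^= h >>> 15;
    return Math.floorMod(h, width);
  }

  // Increments one counter per row for the key.
  public void recordAccess(Object key) {
    for (int row = 0; row < SEEDS.length; row++) {
      counters[row][index(key, row)]++;
    }
  }

  // The minimum across rows never underestimates the true count.
  public int estimate(Object key) {
    int min = Integer.MAX_VALUE;
    for (int row = 0; row < SEEDS.length; row++) {
      min = Math.min(min, counters[row][index(key, row)]);
    }
    return min;
  }

  // Admit the candidate only if it appears more popular than the eviction victim.
  public boolean admit(Object candidate, Object victim) {
    return estimate(candidate) > estimate(victim);
  }

  public static void main(String[] args) {
    TinyLfuSketch sketch = new TinyLfuSketch(16);
    for (int i = 0; i < 5; i++) {
      sketch.recordAccess(1);  // key 1 is accessed often
    }
    sketch.recordAccess(2);    // key 2 is accessed once
    System.out.println("admit 1 over 2: " + sketch.admit(1, 2));
    System.out.println("admit 2 over 1: " + sketch.admit(2, 1));
  }
}
```

Because the counters are shared between keys, estimates can be too high when keys collide in every row, which is the trade-off that makes the structure compact.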

Download

Download from Maven Central or depend via Gradle:

    implementation 'com.github.ben-manes.caffeine:caffeine:3.0.0'

    // Optional extensions
    implementation 'com.github.ben-manes.caffeine:guava:3.0.0'
    implementation 'com.github.ben-manes.caffeine:jcache:3.0.0'

See the release notes for details of the changes.

Snapshots of the development version are available in Sonatype's snapshots repository.