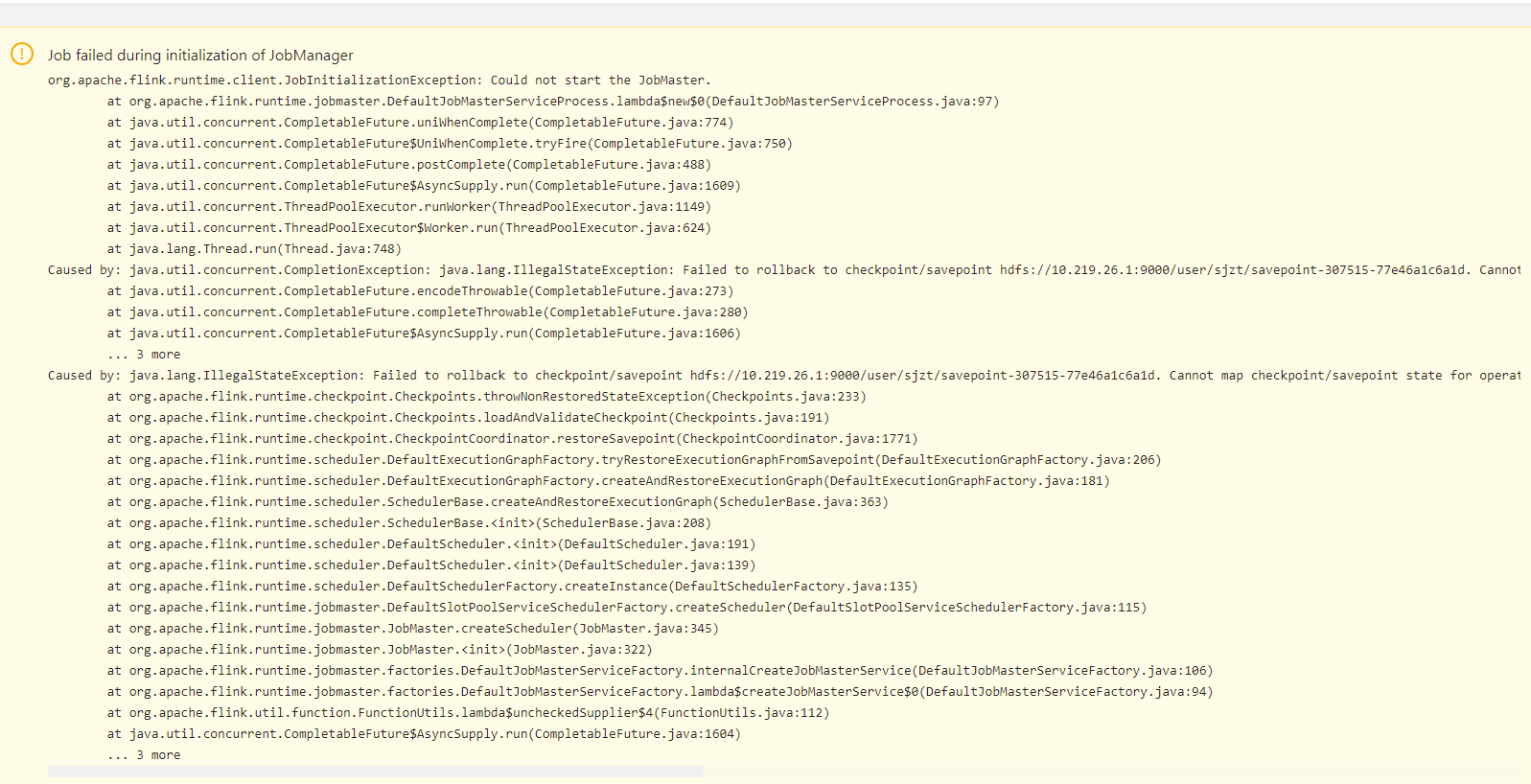

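The same job runs successfully in local mode and also submits successfully through the Flink SQL Client, but submitting it through dlink fails with the following log: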

```text
2022-03-21 20:18:18,249 ERROR org.apache.flink.runtime.entrypoint.ClusterEntrypoint [] - Fatal error occurred in the cluster entrypoint.
org.apache.flink.util.FlinkException: JobMaster for job bc81799a814721461992df5ae06bda8e failed.
	at org.apache.flink.runtime.dispatcher.Dispatcher.jobMasterFailed(Dispatcher.java:892) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.dispatcher.Dispatcher.jobManagerRunnerFailed(Dispatcher.java:459) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.dispatcher.Dispatcher.handleJobManagerRunnerResult(Dispatcher.java:436) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$runJob$3(Dispatcher.java:415) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture$UniHandle.tryFire(CompletableFuture.java:797) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:442) ~[?:1.8.0_181]
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:440) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:208) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:77) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:158) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:26) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:21) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at scala.PartialFunction$class.applyOrElse(PartialFunction.scala:123) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:21) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:170) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.actor.Actor$class.aroundReceive(Actor.scala:517) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:225) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.actor.ActorCell.receiveMessage(ActorCell.scala:592) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.actor.ActorCell.invoke(ActorCell.scala:561) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:258) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.Mailbox.run(Mailbox.scala:225) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.Mailbox.exec(Mailbox.scala:235) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979) [flink-dist_2.11-1.13.6.jar:1.13.6]
	at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107) [flink-dist_2.11-1.13.6.jar:1.13.6]
Caused by: org.apache.flink.runtime.client.JobInitializationException: Could not start the JobMaster.
	at org.apache.flink.runtime.jobmaster.DefaultJobMasterServiceProcess.lambda$new$0(DefaultJobMasterServiceProcess.java:97) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1595) ~[?:1.8.0_181]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]
	at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]
	at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]
Caused by: java.util.concurrent.CompletionException: java.lang.RuntimeException: org.apache.flink.runtime.JobException: Cannot instantiate the coordinator for operator Source: TableSourceScan(table=[[default_catalog, default_database, vehicle_equipment_cdc]], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date]) -> DropUpdateBefore -> NotNullEnforcer(fields=[id]) -> Sink: Sink(table=[default_catalog.default_database.vehicle_equipment_58mysql], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date])
	at java.util.concurrent.CompletableFuture.encodeThrowable(CompletableFuture.java:273) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture.completeThrowable(CompletableFuture.java:280) ~[?:1.8.0_181]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1592) ~[?:1.8.0_181]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]
	at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]
	at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]
Caused by: java.lang.RuntimeException: org.apache.flink.runtime.JobException: Cannot instantiate the coordinator for operator Source: TableSourceScan(table=[[default_catalog, default_database, vehicle_equipment_cdc]], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date]) -> DropUpdateBefore -> NotNullEnforcer(fields=[id]) -> Sink: Sink(table=[default_catalog.default_database.vehicle_equipment_58mysql], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date])
	at org.apache.flink.util.ExceptionUtils.rethrow(ExceptionUtils.java:316) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.function.FunctionUtils.lambda$uncheckedSupplier$4(FunctionUtils.java:114) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]
	at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]
	at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]
Caused by: org.apache.flink.runtime.JobException: Cannot instantiate the coordinator for operator Source: TableSourceScan(table=[[default_catalog, default_database, vehicle_equipment_cdc]], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date]) -> DropUpdateBefore -> NotNullEnforcer(fields=[id]) -> Sink: Sink(table=[default_catalog.default_database.vehicle_equipment_58mysql], fields=[id, tenant_id, is_active, create_date, modify_date, creator, modifier, terminal_code, video_terminal_code, device_type, device_brand, device_model, device_code, device_name, device_number, industry_code, protocol, is_exist_iot, iscanlock_status, application_id, application_name, application_phone, application_source, iscan_dangerous_operation, terminal_type, vehicle_model, inspection_date])
	at org.apache.flink.runtime.executiongraph.ExecutionJobVertex.<init>(ExecutionJobVertex.java:217) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.executiongraph.DefaultExecutionGraph.attachJobGraph(DefaultExecutionGraph.java:792) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.executiongraph.DefaultExecutionGraphBuilder.buildGraph(DefaultExecutionGraphBuilder.java:196) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultExecutionGraphFactory.createAndRestoreExecutionGraph(DefaultExecutionGraphFactory.java:107) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:342) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:190) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:122) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:132) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.DefaultSlotPoolServiceSchedulerFactory.createScheduler(DefaultSlotPoolServiceSchedulerFactory.java:110) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:340) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:317) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.internalCreateJobMasterService(DefaultJobMasterServiceFactory.java:107) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.lambda$createJobMasterService$0(DefaultJobMasterServiceFactory.java:95) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.function.FunctionUtils.lambda$uncheckedSupplier$4(FunctionUtils.java:112) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]
	at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]
	at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]
Caused by: java.lang.ClassCastException: cannot assign instance of com.fasterxml.jackson.databind.util.LRUMap to field com.fasterxml.jackson.databind.deser.DeserializerCache._cachedDeserializers of type java.util.concurrent.ConcurrentHashMap in instance of com.fasterxml.jackson.databind.deser.DeserializerCache
	at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1417) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2293) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readArray(ObjectInputStream.java:1975) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1567) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573) ~[?:1.8.0_181]
	at java.io.ObjectInputStream.readObject(ObjectInputStream.java:431) ~[?:1.8.0_181]
	at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:615) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:600) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:587) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.SerializedValue.deserializeValue(SerializedValue.java:67) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.operators.coordination.OperatorCoordinatorHolder.create(OperatorCoordinatorHolder.java:431) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.executiongraph.ExecutionJobVertex.<init>(ExecutionJobVertex.java:211) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.executiongraph.DefaultExecutionGraph.attachJobGraph(DefaultExecutionGraph.java:792) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.executiongraph.DefaultExecutionGraphBuilder.buildGraph(DefaultExecutionGraphBuilder.java:196) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultExecutionGraphFactory.createAndRestoreExecutionGraph(DefaultExecutionGraphFactory.java:107) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:342) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:190) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:122) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:132) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.DefaultSlotPoolServiceSchedulerFactory.createScheduler(DefaultSlotPoolServiceSchedulerFactory.java:110) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:340) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:317) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.internalCreateJobMasterService(DefaultJobMasterServiceFactory.java:107) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.lambda$createJobMasterService$0(DefaultJobMasterServiceFactory.java:95) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at org.apache.flink.util.function.FunctionUtils.lambda$uncheckedSupplier$4(FunctionUtils.java:112) ~[flink-dist_2.11-1.13.6.jar:1.13.6]
	at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]
	at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_181]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]
	at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]
```
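The root cause at the bottom of the trace is a `ClassCastException` thrown while Java deserialization rebuilds the operator coordinator inside `OperatorCoordinatorHolder.create`: the serialized data stores an `LRUMap` in `DeserializerCache._cachedDeserializers`, but the `DeserializerCache` class loaded in the JobManager declares that field as `ConcurrentHashMap`. One plausible reading (an assumption, not confirmed by the log) is that the submitting side and the JobManager load different `jackson-databind` builds. The sketch below (the class name `ClassOriginCheck` is hypothetical) uses only JDK reflection to print the declared type of that field and the jar its class came from, so the two classpaths can be compared.

```java
import java.lang.reflect.Field;
import java.security.CodeSource;

// Hypothetical diagnostic: prints the declared type of one field and the
// jar (code source) that the owning class was loaded from.
final class ClassOriginCheck {

    static String describeField(String className, String fieldName) {
        try {
            Class<?> cls = Class.forName(className);
            Field field = cls.getDeclaredField(fieldName);
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            // Classes from the JDK bootstrap loader have no code source.
            String location = (source == null) ? "bootstrap/JDK" : String.valueOf(source.getLocation());
            return cls.getName() + "." + fieldName + " : " + field.getType().getName()
                    + " (loaded from " + location + ")";
        } catch (ClassNotFoundException e) {
            return className + " is not on the classpath";
        } catch (NoSuchFieldException e) {
            return className + " has no field " + fieldName;
        }
    }

    public static void main(String[] args) {
        // Defaults to the class and field named in the ClassCastException above.
        String className = args.length > 0 ? args[0]
                : "com.fasterxml.jackson.databind.deser.DeserializerCache";
        String fieldName = args.length > 1 ? args[1] : "_cachedDeserializers";
        System.out.println(describeField(className, fieldName));
    }
}
```

Running it once against the JobManager's jars and once against the dlink server's jars, e.g. `java -cp "<classpath>:." ClassOriginCheck` with `<classpath>` as a placeholder, and comparing the printed field types and jar locations should show whether the two sides see different Jackson builds. A standalone run only approximates Flink's user-code class loading.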

However, when I submit a task asynchronously, the following error is reported:

```text
[dlink] 2022-08-16 17:59:52 CST WARN org.springframework.core.log.CompositeLog 127 warn - Failed to evaluate Jackson deserialization for type [[simple type, class com.dlink.model.Task]]: org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'com.fasterxml.jackson.datatype.jsr310.deser.LocalDateTimeDeserializer': Lookup method resolution failed; nested exception is java.lang.IllegalStateException: Failed to introspect Class [com.fasterxml.jackson.datatype.jsr310.deser.JSR310DateTimeDeserializerBase] from ClassLoader [org.springframework.boot.loader.LaunchedURLClassLoader@2b71fc7e]
[dlink] 2022-08-16 17:59:52 CST WARN org.springframework.core.log.CompositeLog 127 warn - Failed to evaluate Jackson deserialization for type [[simple type, class com.dlink.model.Task]]: org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'com.fasterxml.jackson.datatype.jsr310.deser.LocalDateTimeDeserializer': Lookup method resolution failed; nested exception is java.lang.IllegalStateException: Failed to introspect Class [com.fasterxml.jackson.datatype.jsr310.deser.JSR310DateTimeDeserializerBase] from ClassLoader [org.springframework.boot.loader.LaunchedURLClassLoader@2b71fc7e]
```
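This warning says Spring could not introspect `JSR310DateTimeDeserializerBase` through `LaunchedURLClassLoader`. One possible cause (an assumption here; the log alone does not show it) is that `jackson-datatype-jsr310` and `jackson-databind` come from mismatched versions, or that several copies of Jackson are visible at once. As a hedged sketch (the class `DuplicateClassFinder` is hypothetical), the JDK's `ClassLoader.getResources` can list every location that provides a given class file:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URL;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical diagnostic: lists every classpath location that provides a
// given class file, to spot duplicate (possibly different-version) jars.
final class DuplicateClassFinder {

    static List<String> locationsOf(String className, ClassLoader loader) {
        // Class files are looked up as resources, e.g. com/example/Foo.class.
        String resource = className.replace('.', '/') + ".class";
        List<String> result = new ArrayList<>();
        try {
            for (URL url : Collections.list(loader.getResources(resource))) {
                result.add(url.toString());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return result;
    }

    public static void main(String[] args) {
        // Defaults to the class Spring failed to introspect in the warning above.
        String className = args.length > 0 ? args[0]
                : "com.fasterxml.jackson.datatype.jsr310.deser.JSR310DateTimeDeserializerBase";
        List<String> hits = locationsOf(className, DuplicateClassFinder.class.getClassLoader());
        System.out.println(className + " found in " + hits.size() + " location(s):");
        hits.forEach(hit -> System.out.println("  " + hit));
        if (hits.size() > 1) {
            System.out.println("More than one copy is visible; their versions may differ.");
        }
    }
}
```

It can be rerun with `com.fasterxml.jackson.databind.ObjectMapper` as the argument to check `jackson-databind` itself. Because the dlink server loads classes through Spring Boot's `LaunchedURLClassLoader`, a plain `java -cp` run over the same jars only approximates what the server sees.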